THE GIST: Whether you like it or not, we will soon all rely on expert recommendations generated by artificial intelligence (AI) systems at work and in our personal lives. But out of all the possible options, how can we trust that the AI will choose the best option for us, rather than the one we are most likely to agree with? A whole slew of new applications is now being developed that try to foster more trust in AI recommendations, but what they are actually do is training machines to be better liars. We are inadvertently turning machines into master manipulators.

Have you ever wondered what it would be like to collaborate with a robot-colleague at work? With the AI revolution looming on the horizon, it is clear that the rapid advances of machine learning are poised to reshape the work place. In many ways, you are already relying on AI help today, when you search for something on Google or when you scroll through the Newsfeed on Facebook. Even your fridge and your tooth brush may be already powered by machine learning.

We have come to trust these invisible algorithms without even attempting to understand how they work. Like electricity, we simply trust that when we turn on the light switch, the lights will go on — no intricate knowledge of atoms, energy or electric circuits is necessary for that. But when it comes to more complex machine learning systems, there seems to be no shortage of pundits and self-pro claimed experts who have taken a firm stance on AI transparency, demanding that AI systems are first fully understood before they are implemented.

At the same time, big corporations are already eagerly adopting new AI systems to deal with the deluge of data in their daily business operations. The more data there is to collect and analyse, the more they rely on AI to make better forecasts and choose the best course of action. Some of the most advanced AI systems are already able to make operational decisions that exceed the capacities of human experts. So whether we like it or not, it’s time to get ready for the arrival of our new AI colleagues at work.

The future of human-machine collaboration

As in any other working relationship, the most essential factor for successful human-machine collaboration is trust. But given the complexity of machine learning algorithms, how can we be sure that we can rely on the seemingly fail-safe predictions generated by the AI? If all we have is one recommended course of action, with little to no explanation why this course of action is the best of all possible options, who is to say that we should trust it?

This problem is perhaps best illustrated by the case of IBM’s Watson for Oncology programme. Using one of the world’s most powerful supercomputer systems to recommend the best cancer treatment to doctors seemed like an audacious undertaking straight out of sci-f movies. The AI promised to deliver top-quality recommendations on the treatment of 12 cancers that accounted for 80% of the world’s cases. As of today, over 14,000 patients worldwide have received advise based on the recommendations generated by Watson’s suite of oncology solutions.

“IBM Watson could not explain why its treatment was better because its algorithms were simply too complex to be fully understood by humans.”

However, when doctors first interacted with Watson they found themselves in a rather difficult situation. On the one hand, if Watson provided guidance about a treatment that coincided with their own opinions, physicians did not see much value in Watson’s recommendations. After all, the supercomputer was simply telling them what they did already know. The allegedly superior AI recommendations did thus not change the actual treatment. At most, this may have given physicians some peace of mind, providing them with more confidence in their own decisions, but eventually did not result in improved patient survival rates.

On the other hand, if Watson generated a recommendation that strikingly contradicted the experts’ opinion, oncologists would conclude that Watson was not competent enough. For example, a Danish hospital reported to have abandoned Watson after discovering that its oncologists disagreed with Watson in over 66% of cases. In fact, even IBM Watson’s premier medical partner, the MD Anderson Cancer Center, recently announced it was dropping the programme for good.

When doctors disagreed, Watson was not able to explain why its treatment was plausible, nor how the AI programme arrived at its conclusion. Its machine learning algorithms were simply too complex to be fully understood by humans. This caused even more mistrust and disbelief, leading many physicians to ignore the seemingly “outlandish” machine learning recommendations and stick to their own expertise in oncology.

“If all we have is one recommendation, with little to no explanation why this recommendation is the best of all possible options, who is to say that we should trust it?”

Deep algorithmic aversion

Despite all the technological advancements, we still seem to deeply lack confidence in AI predictions. This can be explained by a combination of technological and psychological factors: the algorithmic complexity of AI systems, the fear of losing control of a situation and the anxiety of interacting with something we do not understand. This is reinforced by fairly common cognitive biases, such as confirmation bias and the somewhat irrational belief in human superiority over machines.

Usually, building trust with human co-workers implies repeated interactions that help us better understand our colleagues. For thousands of years, humans worked with each other through explanation and mutual understanding. If we can understand how the others think, we can form reasonable expectations about what they are going to do next. This understanding typically facilitates a psychological feeling of safety, resulting in a more open and collaborative work culture.

By contrast, when a supercomputer like IBM Watson produces an algorithmically-generated recommendation, this outcome is typically based on thousands of weak signals in the data and their interactions. In theory, there might be a detailed explanation, but it is often too difficult for humans to trace back. Even trivial AI recommender systems face the same problem, because they tend to provide recommendations out of a “black-box”. Too often, it is difficult to convey to human collaborators that the machine has learned the right lessons without being explicitly programmed to do so. As such, the complexity of AI decisions continues to challenge the human mind, provoking more scepticism and mistrust.

In machines we trust?

If AI is to live up to its full potential, we have to find a way to get people to trust it, particularly if it produces recommendations that radically differ from what we are normally used to.

There are two ways out of this crisis of confidence. The first one is more scientific: rigorous (medical) trials, experiments and measurements of outcomes that are causally linked to the new AI systems. This approach is widely used in applications, ranging from digital marketing to industrial production. But in many cases the costs of this approach are too high both in monetary and ethical terms, e.g. when expensive equipment is involved or when human life is at risk.

As a case in point, when IBM Watson suggests a new oncology treatment, its recommendations need to be heavily scrutinised. This involves recruiting patients, testing of results and the publication of studies in peer-reviewed scientific journals. It is easy to see that this approach may not be always feasible, especially in the context of time-sensitive medical decisions.

The other approach to building trust and confidence in machine predictions is through post-hoc explanation. In addition to the primary optimisation function, a secondary algorithm is implemented that generates detailed explanations of what is going on inside the machine, akin to a translator from machine speak to human speak. For example, the fintech “unicorn” Stripe uses this technique to make customers trust its anti-fraud decisions that are made by machine learning algorithms.

Similarly, the political technology start-up Avantgarde Analytics has recently begun implementing similar techniques to better explain its algorithms that target campaign messages to potential voters. In the interest of transparency, a secondary algorithm is tasked to provide detailed explanations to voters on how their data is being used during the election campaign.

Machiavellian machines

Another AI company, SalesPredict, went one step further by asking: can we generate not just any explanation, but the most effective explanation?

As early as 2014, they developed a recommender system for sales lead scoring that used machine learning to optimise for the plausibility of an AI explanation. In this case, the machine was instructed to optimise for the likelihood of its recommendation being accepted by a human collaborator.

Using reinforcement learning techniques, the AI considered people’s emotional perceptions and evolved the way it communicated its recommendations. If the human operator first rejects an AI recommendation, the machine will try again to come up with something that makes more sense to the human. In other words, the AI tries to design a more persuasive explanation for its human colleagues — regardless of what is actually going on inside the model. As Chief Data Scientist of SalesPredict writes, it was about getting the user excited, rather than producing the most accurate prediction.

“The danger of this approach is that by teaching the AI to identify a persuasive and plausible human-friendly explanation, we are teaching the machine to be a better liar.”

Even though this “workaround” works well in some contexts, it could result in some unintended social consequences. In particular, the danger of this approach is that by teaching the AI to identify a persuasive and plausible human-friendly explanation, we are essentially teaching the machine to be a better liar. And this is especially problematic if the machine is built on notions of influence and irrational decision-making from behavioural psychology.

Since the machine optimises for the probability of a solution being accepted by a human collaborator, it may prioritise what we want to hear over what we need to hear. This could produce overly simplified, human-digestible predictions that tend to forego potential gains in productivity. Just think about what happened when a Wired editor decided to like everything in his Facebook Newsfeed; after two days, the algorithm prioritised clickbait content until his feed consisted exclusively of cat videos and BuzzFeed lists.

There is a clear trade-off between AI explainability and efficiency that taps into a deepening sense of disquiet. Pushing for more human-friendly AI solutions might just as well imply making the AI less efficient and more insincere.

In other words, instead of asking “how can I find the most accurate and fair solution to this problem”, the machine would ask: “what do I need to say to make the human believe me?” Taken to its logical conclusion, what we might have accomplished here is building the next generation of supercomputers that are trained to expertly manipulate humans in the rush to gain acceptance and plausibility.

Red Flag Act in 2018?

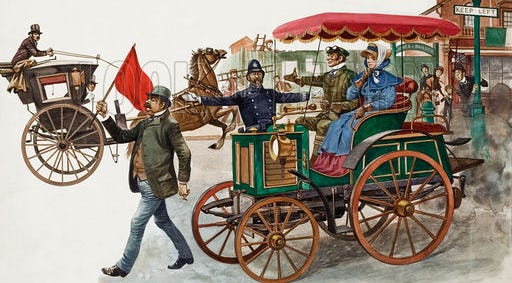

People have always been mistrustful of new technologies. But history shows that even the biggest sceptics eventually concede and get used to new technological realities. For example, in the early days of automobiles, British policy-makers introduced the Red Flag Act.

Red flag laws were laws in the United Kingdom and the United States enacted in the late 19th century, requiring drivers of early automobiles to take certain safety precautions. In particular, this act required cars to be accompanied by three people, and at least one of them was supposed to wave a red flag in front of the vehicle as a warning at all times. Of course, this act undermined the very purpose of an automobile in the most fundamental way (getting from point A to point B as fast as possible). But society simply did not trust these “horseless carriages” enough to allow them on the roads without these additional safety precautions.

The Red Flag Act was repealed 30 years later; not because automobiles became significantly safer in 1896, but simply because people got used to them. The crippling anxiety faded away and there was a collective desire to take advantage of all the benefits of driving a car. As history shows, moral panics are frequently followed by a more pragmatic approach to technology.

There are notable parallels here for the regulation of AI. Advantages offered by advances in machine learning will eventually outweigh the initial scepticism about its implementation. But if regulators continue to stubbornly press for more AI explainability, they could undermine the very potential of machine learning, akin to a new Red Flag Act for AI. In turn, the increased public scrutiny of algorithms could put the heat on developers to create more manipulative AI systems that produce acceptable but misleading explanations at scale.

“If we want AI to really benefit people, we need to find a way to get people to trust it.”

Obviously, this is not exactly the best starting point for a good working relationship between humans and machines. It takes a giant leap of faith to allow machines that are smarter than us into our lives; to welcome them into a realm of work historically led by humans; to allow them to shape our decisions, dreams and everyday experiences. In this future, trust is the most essential ingredient in virtually every type of human-machine collaboration.

The bad news is that it only takes one Machiavellian machine to shatter the foundation of trust. Intuitively, we all know that building trust is hard, while breaking trust is easy. But the good news is that it is possible to build more confidence in machine predictions if we learn from past mistakes, conduct proper experiments and judge AI systems fairly by the results they produce, rather than the explanations they come up with.